Sundance DSP Research Report

As field-programmable gate arrays (FPGAs) and adaptive SoCs evolve, their role in accelerating AI and machine learning (ML) applications becomes increasingly significant. These devices offer high levels of parallelism and flexibility, making them suitable for computationally intensive workloads, particularly deep learning and real-time inference. This report compares the AMD UltraScale™ and Versal™ architectures, focusing on their suitability for AI tasks, highlighting their strengths and weaknesses, and tracing the evolution of AI capabilities across these platforms.

1. UltraScale Architecture: Legacy and Initial AI Capabilities

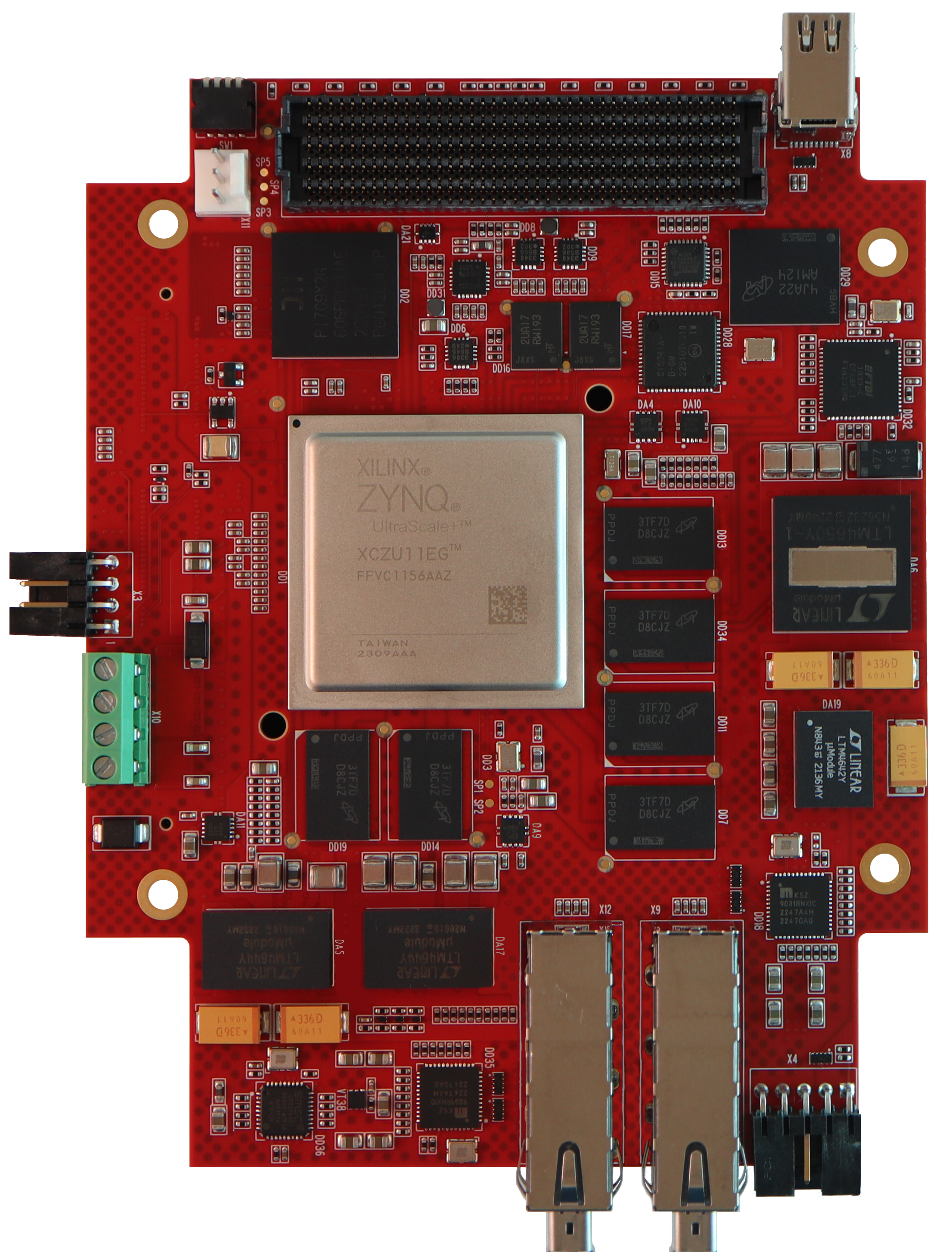

The UltraScale architecture, released in 2014, spans UltraScale FPGAs and UltraScale+ FPGAs and adaptive SoCs. This architecture was a significant milestone in programmable logic technology, delivering speed, power efficiency, and integration improvements compared to its predecessors. UltraScale and UltraScale+ devices are highly flexible, enabling a wide range of applications from high-performance computing (HPC) to telecommunications and, more recently, AI. Despite these advantages, these devices were not specifically optimized for AI workloads, leading to limitations in their AI performance.

AI Capabilities of the UltraScale Architecture

- Parallelism: The fundamental strength of UltraScale and UltraScale+ devices in AI tasks lies in their ability to exploit fine-grained parallelism. With many thousands to millions of logic cells, plus dedicated DSP slices, all able to operate concurrently, UltraScale FPGAs can handle matrix operations, convolutions, and other key operations essential for AI and ML models.

- Customizable Hardware Accelerators: One of the most compelling features of UltraScale FPGAs is the ability to create custom hardware accelerators. AI-specific functions, such as data preprocessing or layer-specific neural network operations, can be offloaded onto these programmable hardware blocks, providing tailored acceleration for specific AI tasks.

- High Throughput and Low Latency: UltraScale devices provide high data throughput and low-latency communication, which are key characteristics for real-time AI applications like autonomous vehicles, robotics, and live video analytics.
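
The fine-grained parallelism described in the list above can be illustrated with a small software model. The sketch below is not HDL and does not come from any AMD tool; it is a plain Python model, with hypothetical function names, of how a dot product (the core of matrix operations and convolutions) maps onto one multiplier per element pair followed by a pairwise adder tree. The point it shows is that the number of sequential steps grows with the logarithm of the vector length rather than with the length itself, assuming enough multipliers and adders are available in the fabric.

```python
from typing import List, Sequence, Tuple


def lane_products(a: Sequence[int], b: Sequence[int]) -> List[int]:
    """Model one multiplier per element pair; in hardware these run concurrently."""
    if len(a) != len(b):
        raise ValueError("operands must have the same length")
    return [x * y for x, y in zip(a, b)]


def adder_tree(values: Sequence[int]) -> Tuple[int, int]:
    """Sum values pairwise and return (total, number of tree levels)."""
    level = list(values)
    depth = 0
    while len(level) > 1:
        if len(level) % 2:
            level.append(0)  # pad odd-sized levels with a zero input
        # Every addition within one level is independent of the others.
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
        depth += 1
    return (level[0] if level else 0), depth


def parallel_dot(a: Sequence[int], b: Sequence[int]) -> Tuple[int, int]:
    """Dot product as parallel multiplies followed by an adder tree."""
    return adder_tree(lane_products(a, b))


if __name__ == "__main__":
    total, depth = parallel_dot([1, 2, 3, 4], [5, 6, 7, 8])
    print(total, depth)  # prints "70 2": four multiplies, then two adder levels
```

A sequential processor would need four multiply-accumulate steps for this example; the modeled structure needs one multiply stage and two add stages, and the gap widens as vectors grow.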

Limitations of the UltraScale Architecture for AI Workloads

- Absence of AI-Specific Hardware: Despite their flexibility, UltraScale FPGAs lack the specialized hardware components required to accelerate AI workloads fully. For example, they do not include tensor processing units (TPUs) or similar AI-specific accelerators, which makes them less efficient than GPUs or other purpose-built AI hardware for deep learning tasks.

- AI Workload Development Complexity: The development tools for UltraScale FPGAs were designed for general-purpose applications, but AI workloads require specialized optimizations. Programming these devices for AI tasks is challenging as it requires in-depth knowledge of both hardware and software, often using low-level hardware description languages like VHDL or Verilog. As a result, the learning curve for developers is steep.

- Limited Framework Support: At the time of their release, UltraScale FPGAs did not offer comprehensive support for AI frameworks such as TensorFlow, PyTorch, or Caffe. Developers could still implement AI models manually, but it was cumbersome and time-consuming compared to the more straightforward GPU-based workflows.

2. Versal Architecture: Refining AI Performance and Developer Experience

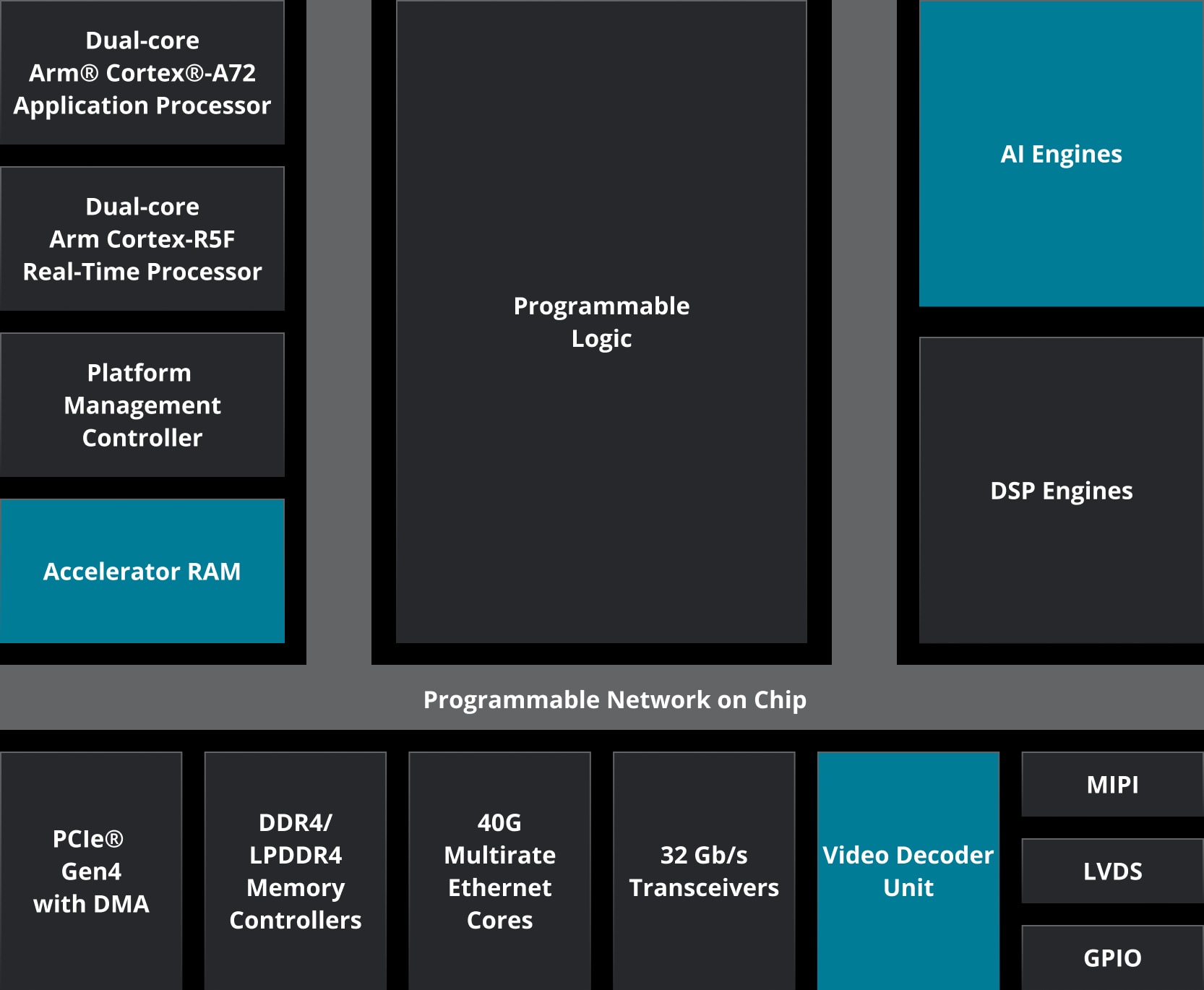

The Versal portfolio, introduced in 2019, represents a significant departure from the UltraScale architecture, targeting AI and ML workloads more directly. The Versal architecture combines multiple types of compute engines (programmable logic, CPUs, vector processors, and DSP Engines) into a single chip and supports them with software-programmable silicon infrastructure. This increased heterogeneity enables Versal devices to provide both the flexibility of FPGAs and the performance optimizations necessary for AI applications.

AI Capabilities of the Versal Architecture

- AI Engines: One of the key innovations of the Versal portfolio is the inclusion of AI Engines, dedicated SIMD (Single Instruction, Multiple Data) processors optimized for high-throughput AI workloads, particularly deep learning inference. These AI Engines provide significant acceleration for operations like matrix multiplication, which are fundamental to AI models, especially convolutional neural networks (CNNs). The integration of hundreds of AI Engines within a single Versal adaptive SoC enables higher parallelism, making AI inference much faster and more efficient.

- Heterogeneous Compute: The Versal architecture includes programmable logic (PL), scalar processors (CPUs), and DSP Engines in addition to AI Engines. This integration allows for a much more flexible system where specific AI tasks can be offloaded to the most appropriate processor, whether a CPU for control tasks, an AI Engine for data-intensive neural network computations, or programmable logic for custom preprocessing and sensor interfacing. This architectural flexibility makes the Versal portfolio a versatile platform for a wide range of AI and ML applications.

- AMD Vitis™ AI: To improve the ease of adoption for AI workloads, AMD introduced the Vitis AI development stack with the Versal portfolio. The Vitis AI software platform provides pre-optimized libraries and kernel acceleration for deep learning models, which makes it easier to deploy AI models onto adaptive SoCs. This lowers the barrier for developers who are familiar with AI frameworks like TensorFlow and PyTorch but not necessarily with FPGA programming.
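
The heterogeneous compute idea in the list above, where each task goes to the engine best suited to it, can be sketched as a simple partitioning table. The following Python is a conceptual illustration only: the class names, the `PREFERRED_ENGINE` mapping, and the `partition` function are hypothetical and are not part of Vitis AI or any AMD API. The mapping itself follows the assignment described in the text (CPU for control, AI Engine for neural network computation, programmable logic for preprocessing and sensor interfacing).

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Sequence, Tuple


class Engine(Enum):
    PROGRAMMABLE_LOGIC = "programmable logic"
    AI_ENGINE = "AI Engine"
    CPU = "scalar processor"


class WorkloadKind(Enum):
    SENSOR_INTERFACE = "sensor interfacing"
    PREPROCESSING = "custom preprocessing"
    NEURAL_NETWORK = "neural network computation"
    CONTROL = "control"


# Hypothetical mapping that mirrors the assignment described in the text.
PREFERRED_ENGINE = {
    WorkloadKind.SENSOR_INTERFACE: Engine.PROGRAMMABLE_LOGIC,
    WorkloadKind.PREPROCESSING: Engine.PROGRAMMABLE_LOGIC,
    WorkloadKind.NEURAL_NETWORK: Engine.AI_ENGINE,
    WorkloadKind.CONTROL: Engine.CPU,
}


@dataclass(frozen=True)
class Stage:
    name: str
    kind: WorkloadKind


def partition(stages: Sequence[Stage]) -> List[Tuple[str, Engine]]:
    """Assign each pipeline stage to its preferred engine, preserving order."""
    return [(stage.name, PREFERRED_ENGINE[stage.kind]) for stage in stages]


if __name__ == "__main__":
    pipeline = [
        Stage("camera capture", WorkloadKind.SENSOR_INTERFACE),
        Stage("resize and normalize", WorkloadKind.PREPROCESSING),
        Stage("CNN inference", WorkloadKind.NEURAL_NETWORK),
        Stage("actuator decision", WorkloadKind.CONTROL),
    ]
    for name, engine in partition(pipeline):
        print(f"{name} -> {engine.value}")
```

In a real design this partitioning is decided at development time and involves data movement and bandwidth trade-offs that a lookup table does not capture; the sketch only makes the division of labor concrete.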

Limitations of the Versal Architecture for AI Workloads

- Performance for Large-Scale Training: While the Versal portfolio provides substantial acceleration for AI inference, it still lags behind GPUs or TPUs in terms of raw compute power, particularly when it comes to large-scale training of deep learning models. Versal devices are highly effective for edge AI and real-time inference, but they may not be suitable for training large, complex models from scratch.

- Hardware Development Complexity: Although the Vitis AI tools simplify the development process for AI workloads, optimizing the full end-to-end system still requires an understanding of hardware design. While programmable logic can deliver throughput and latency advantages for preprocessing and sensor fusion, the need for FPGA design expertise may pose a barrier to adoption compared to more straightforward GPU-based development workflows.

3. Versal AI Edge Series Portfolio: Two Generations of Adaptive SoCs Focused on Edge AI

Within the Versal portfolio, the Versal AI Edge Series portfolio is AMD's recommended focus for edge AI applications. The first-generation Versal AI Edge Series, announced in 2021, features AI Engines optimized for machine learning and targets use cases that require optimization of both sensor processing and inference.

In 2024, AMD introduced the Versal AI Edge Series Gen 2, an extension of the Versal AI Edge Series portfolio, which enables full end-to-end acceleration of sensor processing, inference, and control/postprocessing for edge AI systems.

Enhanced Capabilities of the Versal AI Edge Series Gen 2

- Higher Efficiency AI Engines: Versal AI Edge Series Gen 2 devices offer updated AI Engines optimized for machine learning, with new native datatype support and improved energy efficiency. These improvements make the Versal AI Edge Series Gen 2 an even better solution for AI inference tasks, particularly for deep learning models that require substantial computational resources.

- Upgraded Embedded CPUs: In addition to updated AI Engines, Versal AI Edge Series Gen 2 devices offer significantly more powerful embedded Arm® cores than the first generation. They feature up to 8x Arm Cortex®-A78AE application cores and up to 10x Cortex-R52 real-time cores, providing the scalar computing power required to accelerate postprocessing/control workloads in addition to sensor processing and inference tasks.

- Improved Power Management: Power management is particularly important for edge AI applications, where power constraints are often critical. The Versal AI Edge Series Gen 2 has been designed with restrictive operating conditions in mind and delivers improvements in power management, including voltage ID support, power gating options within the embedded CPUs, and greater flexibility to operate different compute engines at different voltages.

- Portfolio Scalability: AMD positions the Versal AI Edge Series Gen 2 as a complement to the first-generation Versal AI Edge Series rather than as a direct replacement. For applications without a high-performance scalar compute requirement, the first-generation Versal AI Edge Series offers a broader range of AI compute densities, particularly on the low end. The Versal AI Edge Series Gen 2, in turn, extends the upper range of use cases served by the Versal AI Edge Series portfolio to those that require true end-to-end system acceleration in a single adaptive SoC. Together, this combined portfolio of devices offers users the flexibility to balance power versus performance in systems designed for demanding environments.
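
To see why the ability to run different compute engines at different voltages (noted under power management above) matters, a first-order estimate helps. The sketch below uses the standard textbook approximation that CMOS dynamic power scales with switched capacitance, the square of supply voltage, and clock frequency. The function name and all scale factors are illustrative assumptions; none of the numbers are AMD specifications or measurements, and the model ignores static (leakage) power.

```python
def relative_dynamic_power(voltage_scale: float, frequency_scale: float = 1.0) -> float:
    """Dynamic power relative to a baseline, using the model P ~ C * V^2 * f.

    voltage_scale and frequency_scale are ratios against the baseline
    operating point (1.0 means unchanged). Capacitance is assumed constant.
    """
    if voltage_scale <= 0 or frequency_scale <= 0:
        raise ValueError("scale factors must be positive")
    return voltage_scale ** 2 * frequency_scale


if __name__ == "__main__":
    # Hypothetical operating points per engine: (voltage ratio, frequency ratio).
    operating_points = {
        "AI Engines": (1.0, 1.0),         # kept at the baseline for inference
        "programmable logic": (0.9, 1.0), # assumed 10% lower voltage
        "embedded CPUs": (0.8, 0.5),      # assumed lower voltage and clock
    }
    for engine, (v, f) in operating_points.items():
        print(f"{engine}: {relative_dynamic_power(v, f):.2f}x baseline dynamic power")
```

Because voltage enters squared, even a modest reduction on an engine that does not need peak performance yields a disproportionate saving, which is the motivation for independent voltage domains in power-constrained edge systems.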

Conclusion: Choosing the Right FPGA or Adaptive SoC for AI Workloads

The comparison of the UltraScale and Versal architectures, including both generations of the Versal AI Edge Series portfolio, highlights the evolution of FPGA technology from a general-purpose acceleration platform to one specifically optimized for AI and ML workloads. While UltraScale and UltraScale+ FPGAs and adaptive SoCs offer excellent parallel processing capabilities, they lack the specialized hardware required for efficient AI acceleration. The Versal architecture introduces the AI Engines and new AI development workflows, addressing the limitations of the UltraScale architecture for AI workloads. Within the Versal portfolio, the Versal AI Edge Series Gen 2 builds on the foundation of the Versal architecture with improved AI Engine efficiency, power management, and additional embedded computing power.

For developers building edge AI systems, Versal devices, particularly the Versal AI Edge Series portfolio, represent the most comprehensive and optimized platform of those compared here, combining strong inference performance, a more accessible development flow, and scalability across device sizes. UltraScale and UltraScale+ FPGAs and adaptive SoCs remain a solid choice for developers with existing UltraScale designs or those requiring more general-purpose acceleration capabilities, but for AI-specific tasks, the newer Versal architecture provides substantial advantages.